Introduction

Implementing Data Tokenization – Steps and Strategies for Success

Data has become an invaluable asset for companies, which underscores the critical need for strong security measures to protect it. In 2022, the United States experienced 1,802 cases of data compromise, impacting 422 million individuals. As data breaches become increasingly common, maintaining data privacy remains a top challenge for leaders in data management. To counter these security threats, many organizations are adopting data tokenization. This strategy involves substituting sensitive data with non-sensitive placeholders to prevent unauthorized access. This article takes a closer look at the concept of data tokenization and explains how it works. We’ll also examine typical applications of data tokenization and discuss how it differs from encryption.

Key Takeaways

- Implementing data tokenization is a strategic decision that significantly enhances data security and privacy for organizations.

- Data tokenization helps protect sensitive information from unauthorized access, reducing the risk of data breaches.

- Utilizing data tokenization tools ensures that organizations can meet stringent regulatory requirements effectively.

- Tokenization allows businesses to maintain the integrity and usability of their data in secure environments.

- Integrating blockchain development solutions, such as those offered by BloxBytes, with data tokenization strategies can further enhance security and compliance, offering robust protection for digital assets.

What is Data Tokenization?

Data tokenization is the process of converting sensitive information into a unique token or identifier while keeping its essential properties and linking to the original data. This token represents the real data and allows its use across various systems and processes without revealing the sensitive information itself. Because sensitive data remains protected even if the token falls into the wrong hands, tokenization is often used as a security measure to preserve data privacy. The actual sensitive data stays safe, and the token can be utilized for legitimate purposes, such as data analysis, storage, or sharing. In a protected environment, a token can briefly reconnect to the original data during a transaction or operation. This setup prevents sensitive details from being disclosed while letting authorized systems or applications verify and process the tokenized data.

The Purpose of Data Tokenization

The primary aim of data tokenization is to boost data security and privacy. By replacing sensitive information with unique tokens, it shields this data from unauthorized access, reducing the likelihood of data breaches and lessening the impact of any security incidents. Here are some key purposes of data tokenization:

- Data Protection: Tokenization of data shields sensitive information, such as credit card details, social security numbers, and personal identifiers, from being stored or transmitted in its original form. This reduces the risk of unauthorized access or data breaches and minimizes the exposure of sensitive information.

- Compliance: Tokenization aids companies in adhering to industry standards like the Payment Card Industry Data Security Standard (PCI DSS). By tokenizing sensitive data, businesses can handle less sensitive information directly and narrow the scope of compliance audits, thereby enhancing the efficiency of compliance management.

- Reduced Risk: The risk associated with storing and transmitting sensitive data is lowered through tokenization of data. Tokens themselves are of no value without access to the tokenization system or database, rendering them useless if intercepted by unauthorized individuals.

- Simplified Data Handling: Tokens can replace sensitive data in various operations such as transaction processing, data analysis, or storage. Since tokens can be processed and managed just like the original data without needing decryption or revealing sensitive details, this simplifies data handling procedures.

- Data Integrity: Tokenization maintains the integrity and format of the original data by replacing it with tokens. Consequently, tokenized data can be seamlessly utilized by authorized systems and applications, as the tokens retain essential properties of the original data without any loss.

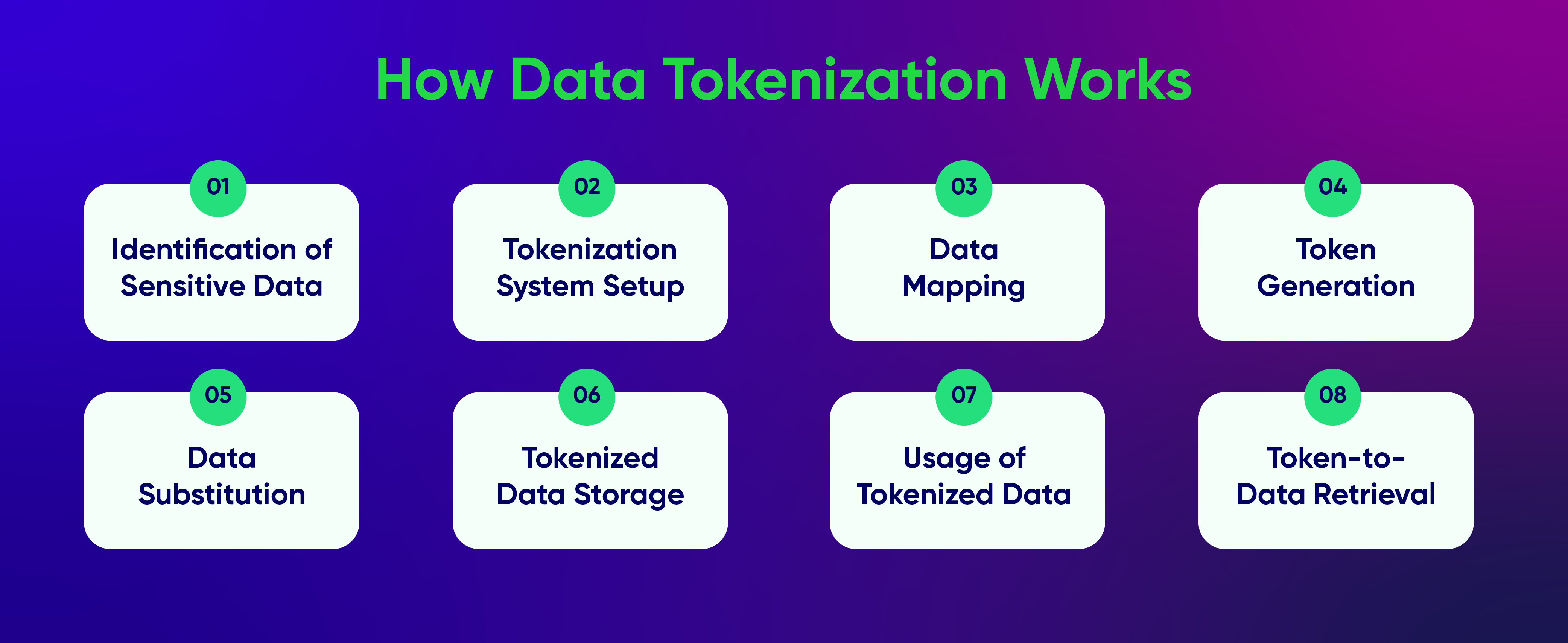

How Data Tokenization Works

Data tokenization transforms sensitive information into tokens while preserving its value and linking to the original data. Here’s a step-by-step explanation of how it functions:

- Identification of Sensitive Data: First, identify the sensitive data that needs tokenization, such as credit card numbers, social security numbers, or personal identifiers.

- Tokenization System Setup: A tokenization system is established to oversee the tokenization process. This system often includes secure databases, encryption methods, and algorithms to generate and manage tokens.

- Data Mapping: A mapping table or database is created to associate sensitive data with its corresponding tokens. This ensures the connection between the original and tokenized data is securely maintained.

- Token Generation: The system generates a unique token to replace the sensitive data. This token is usually a random sequence of numbers or letters.

- Data Substitution: The generated token replaces the sensitive data, which can be done in batches or in real-time during data entry.

- Tokenized Data Storage: Tokenized data, along with any related metadata, is securely stored in a tokenization database. This setup ensures that even if the tokenized data is accessed improperly, it cannot be used to retrieve the original sensitive data, as the sensitive data is not stored in its original form.

- Usage of Tokenized Data: Authorized systems or applications use tokens instead of the original sensitive data for processing. The tokens circulate within the system for tasks such as transactions, analysis, or storage.

- Token-to-Data Retrieval: If there is a need to access the original data associated with a token, the tokenization system uses the mapping table or database to retrieve the relevant sensitive data.
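The steps above can be sketched as a small in-memory vault. This is a minimal illustration under stated assumptions, not a production design: the `TokenVault` class, the `tok_` prefix, and the sample record are all hypothetical, and a real tokenization system would keep the mapping in a hardened, access-controlled datastore rather than a Python dictionary.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a tokenization system (hypothetical, for illustration)."""

    def __init__(self):
        # Mapping table: token -> original value, plus a reverse index so the
        # same value always receives the same token.
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        """Return the existing token for value, or generate and store a new one."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)  # random, unrelated to the value
        while token in self._token_to_value:  # regenerate on the (unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; raises KeyError for unknown tokens."""
        return self._token_to_value[token]


vault = TokenVault()
record = {"name": "Alice Example", "card_number": "4111111111111111"}

# Data substitution: replace the sensitive field before the record is stored or shared.
safe_record = {**record, "card_number": vault.tokenize(record["card_number"])}

# Token-to-data retrieval: only code with access to the vault can reverse the token.
original = vault.detokenize(safe_record["card_number"])
```

Downstream systems would work with `safe_record` only. In this sketch the same input always maps to the same token, which keeps joins and lookups on tokenized columns working; whether that is desirable depends on the use case.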

Techniques for Tokenization of Data

Here’s a breakdown of common data tokenization methods:

- Format-Preserving Tokenization: This approach generates tokens that mimic the original data’s structure and length. For instance, a tokenized version of a 16-digit credit card number would also be a 16-digit number.

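- Secure Hash Tokenization: Tokens are generated using a secure one-way hash function, like SHA-256. Because the hash function is one-way and produces a fixed-length string, reconstructing the original data from the token is computationally infeasible. For low-entropy inputs such as card numbers, however, an unkeyed hash can be brute-forced by hashing every candidate value, so a secret key or salt is typically mixed in (for example, with HMAC).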

- Randomized Tokenization: This technique involves using completely random tokens that don’t relate to the original data. These tokens are stored securely in a tokenization system where they can be accurately linked back to the original data.

- Split Tokenization: This method divides sensitive data into parts and tokenizes each segment separately. Distributing the tokenized pieces across different systems or locations enhances security.

- Cryptographic Tokenization: This technique is a mix of tokenization and encryption. It involves encrypting the sensitive data using a strong algorithm and then tokenizing the encrypted data’s value. The encryption key is kept secure to ensure it can be decrypted when needed.

- Detokenization: This is the reverse process where the original data is retrieved from the token. It requires securely storing and managing the mapping from the token back to the data.
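Two of these techniques are easy to illustrate with the Python standard library. The sketch below is only an approximation under stated assumptions: `format_preserving_token` and `hash_token` are hypothetical helper names, the format-preserving variant simply draws random digits (so it still needs a mapping table to be reversible and is not a format-preserving encryption cipher), and the hash variant uses HMAC-SHA256 with a secret key rather than a bare SHA-256 so that short inputs cannot be guessed by hashing every candidate.

```python
import hashlib
import hmac
import secrets


def format_preserving_token(card_number: str, keep_last: int = 4) -> str:
    """Return random digits of the same length, keeping the last digits visible.

    The token has no mathematical link to the input, so reversing it
    requires a stored token-to-value mapping (not shown here).
    """
    hidden = len(card_number) - keep_last
    random_part = "".join(secrets.choice("0123456789") for _ in range(hidden))
    return random_part + card_number[hidden:]


def hash_token(value: str, key: bytes) -> str:
    """Return a keyed one-way token: deterministic, but not reversible."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


key = secrets.token_bytes(32)  # hypothetical secret; a real system would use a key manager
card = "4111111111111111"

fp_token = format_preserving_token(card)  # 16 digits ending in 1111
h_token = hash_token(card, key)           # 64 hexadecimal characters
```

The format-preserving token fits into any field that expects a 16-digit number, while the hash token is useful when the same input must always produce the same token and the original never needs to be recovered.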

Benefits of Data Tokenization

Data tokenization provides several advantages for businesses aiming to boost data security and privacy:

- Enhanced Data Security: Tokenization replaces sensitive information with tokens, reducing the chance of unauthorized access and data breaches. Tokens are useless to attackers if intercepted because they require access to the tokenization system to be meaningful.

- Regulatory Compliance: Tokenization helps businesses meet data protection standards and regulatory requirements. For example, it aids compliance with the PCI DSS by limiting the storage of sensitive data and reducing the risks associated with handling payment card information.

- Maintained Data Integrity: Tokenization preserves the structure and integrity of the original data. Because tokens retain certain characteristics of the original data, authorized systems can process tokenized data without compromising its accuracy or integrity.

- Streamlined Data Handling: Tokenization allows tokens to be used in place of original data, simplifying data management. This makes data operations more efficient, as tokens can be handled and maintained by authorized systems without the need for decryption or exposing sensitive information.

- Reduced Risk: By tokenizing sensitive data, businesses lessen the risk associated with storing and transferring it. The risk of data breaches is significantly lowered because tokens, by themselves, hold no value and cannot be utilized for unauthorized purposes.

- Scalability and Flexibility: Tokenization is a flexible solution that can be applied to various types of sensitive data and integrated across multiple platforms and applications, allowing it to scale as business needs evolve.

- Boosted Customer Trust: Tokenization enhances customer confidence by demonstrating a commitment to data protection. Customers are more likely to trust companies with their information if they know their sensitive data is protected through tokenization.

- Decreased Compliance Burden: Tokenization can simplify the process of compliance audits and the protection of sensitive data. By reducing the volume of sensitive data stored, it narrows the scope of compliance assessments and audits, making the process less burdensome.

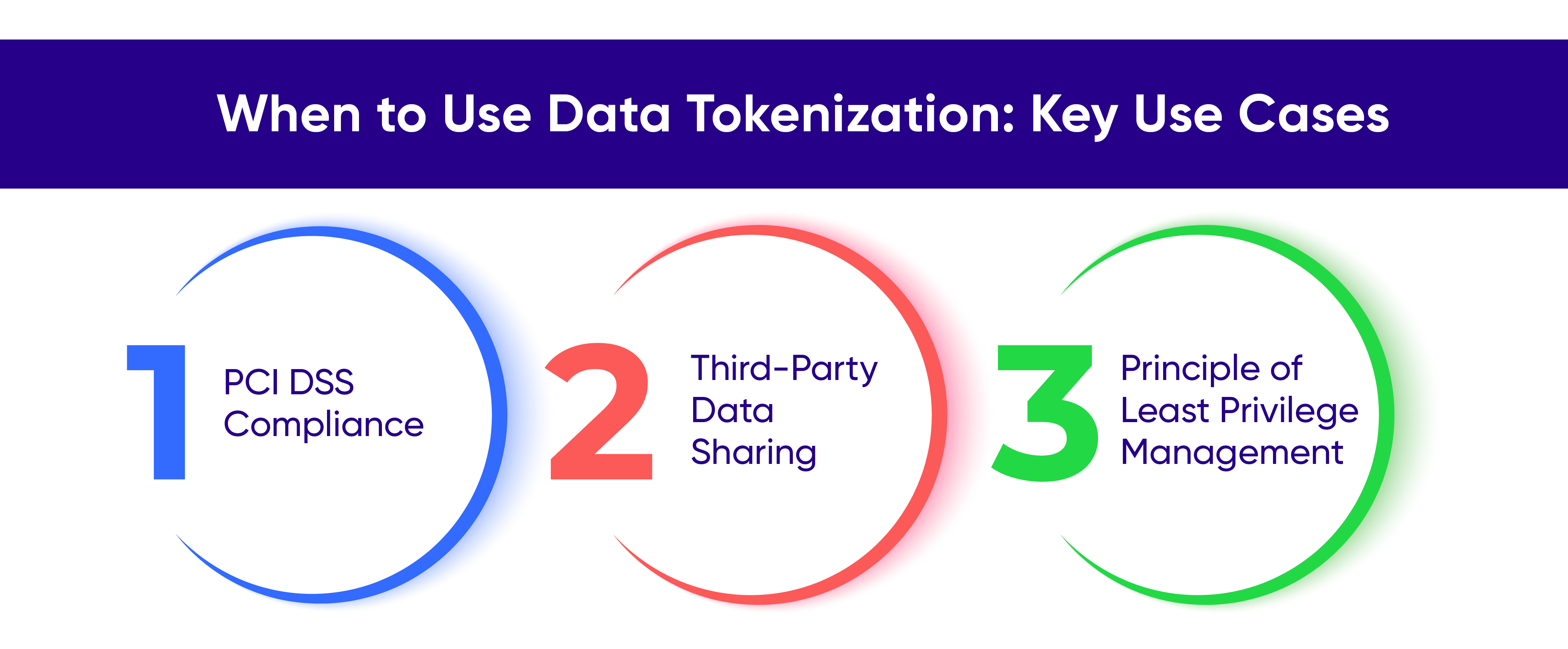

When to Use Data Tokenization: Key Use Cases

Data tokenization is not only crucial for enhancing security in online payments but also proves beneficial in various scenarios:

PCI DSS Compliance

Any organization that processes, stores, or transmits credit card information must adhere to the Payment Card Industry Data Security Standard (PCI DSS) to ensure data is handled securely. Tokenization helps meet these requirements because tokens are generally outside the scope of PCI DSS 3.2.1, provided the tokenization process is sufficiently separate from the applications using the tokens. This can significantly reduce the time and effort involved in administrative tasks.

Third-Party Data Sharing

When sharing data with third parties, using tokenized data instead of sensitive data reduces the typical risks associated with giving external entities control over such information. Additionally, tokenization can help organizations limit the compliance obligations that arise when data is shared across different regions and systems, such as under data localization laws or the cross-border transfer rules of the GDPR.

Principle of Least Privilege Management

The principle of least privilege ensures individuals access only the data necessary for their specific tasks. Tokenization can facilitate this by limiting who is able to detokenize sensitive data. In environments where data is pooled together, such as in a data lake or data mesh, tokenization ensures that only those with the right access can revert the tokens to the original data. Tokenization is also valuable for enabling the use of sensitive data in other activities, such as data analysis, and for addressing potential threats identified during risk assessments or through threat modeling.

Potential Risks of Data Tokenization

While data tokenization brings several benefits, there are also risks and considerations that organizations need to be aware of. Here are some challenges associated with data tokenization:

- Vulnerabilities in the Tokenization System: The security of the tokenization system is crucial. If the system is compromised, attackers could potentially reverse-engineer the tokens to access private data, leading to unauthorized use of tokenized data or access to the mapping table.

- Reliance on Tokenization Infrastructure: As tokenization systems become more common, many organizations may become dependent on them. Any disruptions or outages in the tokenization system could affect operations that depend on tokenized data.

- Data Conversion Challenges: Implementing tokenization might require modifications to existing applications and systems to accommodate tokens. Organizations need to consider the effort and potential challenges involved in adapting existing data and systems for tokenization.

- Tokenization Limitations: Tokenization may not be suitable for all data types or scenarios. For example, data with complex relationships or structured data required for specific processes may pose difficulties in tokenization implementation.

- Complexity of Implementation: Developing a robust tokenization system involves careful planning and integration with existing systems and processes, adding complexity. Managing mapping tables, securely storing tokens and sensitive data, and ensuring proper token generation can all increase this complexity.

- Regulatory Concerns: While tokenization can aid in compliance, organizations need to ensure that their tokenization practices conform to all relevant regulations. Understanding the legal and regulatory implications of tokenization is essential to avoid penalties or compliance issues.

- Key Management in Tokenization: Effective key management is critical for secure tokenization. Organizations must ensure that encryption keys used in the tokenization process are properly generated, stored, and managed. Poor key management practices can compromise the security of tokens and the sensitive data they protect.

- Integrity of Token-to-Data Mapping: Maintaining the accuracy and reliability of the token-to-data mapping table or database is vital. Any errors or inconsistencies in the mapping can lead to data integrity issues or difficulties in retrieving the original data when necessary.
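As one illustration of the mapping-integrity concern, a periodic consistency check can flag entries that would later fail to detokenize. The sketch below assumes the vault keeps both a token-to-value table and a value-to-token reverse index; that layout, the `check_mapping` function, and the sample data are all hypothetical.

```python
def check_mapping(token_to_value: dict[str, str],
                  value_to_token: dict[str, str]) -> list[str]:
    """Return a list of inconsistencies between the two tables (empty if none).

    Messages mention tokens only, never the sensitive values themselves.
    """
    problems = []
    # Every token should be reachable again from the value it stands for.
    for token, value in token_to_value.items():
        if value_to_token.get(value) != token:
            problems.append(f"token {token!r} has no matching reverse entry")
    # Every reverse entry should point at a token that actually exists.
    for token in value_to_token.values():
        if token not in token_to_value:
            problems.append(f"reverse entry points at unknown token {token!r}")
    return problems


token_to_value = {"tok_a1": "123-45-6789", "tok_b2": "987-65-4321"}
value_to_token = {"123-45-6789": "tok_a1"}  # reverse entry for tok_b2 is missing

problems = check_mapping(token_to_value, value_to_token)
```

Here `problems` holds a single message about `tok_b2`. A real deployment would more likely rely on database constraints and transactional writes, with a check like this as a secondary safeguard.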

Data Tokenization vs. Data Encryption

Data tokenization and data encryption are two fundamental security techniques used to protect sensitive information. While both methods aim to safeguard data, they operate differently and serve unique purposes within an organization’s data security strategy.

Data Tokenization

Data tokenization involves replacing sensitive data elements with non-sensitive equivalents, known as tokens. These tokens retain no usable data but maintain a format similar to the original input. The original data is stored securely in a token vault, and the tokens are used in place of real data within business processes. This approach is particularly useful in environments where maintaining the format of the data is essential but the original data is not needed for processing.

Data Encryption

Data encryption transforms data into a secure format that can only be read or processed after it has been decrypted, which requires a decryption key. This method encrypts data at rest, in transit, or in use, ensuring that even if the data is intercepted, it cannot be understood without the corresponding key. Encryption is widely used across different industries for securing communications and data storage, providing a high level of security for sensitive information.

| Feature | Data Tokenization | Data Encryption |
| --- | --- | --- |
| Accessibility | Tokenized data can be used directly in business processes without needing to revert to its original form. | Encrypted data must be decrypted before it can be used in business processes. |
| Reversibility | Original data can be retrieved by reversing the tokenization process through a secure lookup. | Original data is retrieved by decrypting it using a specific key. |
| Performance Impact | Generally has a lower impact on system performance since it doesn’t require intensive computational resources. | Can require significant computational resources, impacting system performance due to the need for data encryption and decryption. |
| Compliance Scope | Reduces the scope of compliance by minimizing the exposure of sensitive data. | Maintains data within the scope of compliance mandates but secures it through encryption. |
| Use Case | Suited for environments needing to maintain data format without using real data, such as in processing transactions. | Ideal for securing data across various states (at rest, in transit, or in use) in environments where data security is paramount. |
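To make the reversibility difference concrete, the sketch below reverses a ciphertext with a key and a token with a lookup. The cipher is a one-time pad built on XOR, chosen here only because it needs no third-party library; that choice is an assumption for illustration, and a production system would use a vetted authenticated cipher such as AES-GCM. The `xor_bytes` helper and the dictionary vault are hypothetical.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each data byte with the matching key byte (one-time pad)."""
    if len(key) != len(data):
        raise ValueError("one-time pad key must match the data length")
    return bytes(d ^ k for d, k in zip(data, key))


secret = b"4111111111111111"

# Encryption: the ciphertext is computed from the data and a key,
# so anyone holding the key can compute the data back.
key = secrets.token_bytes(len(secret))
ciphertext = xor_bytes(secret, key)
decrypted = xor_bytes(ciphertext, key)

# Tokenization: the token is random, so the only way back is a table lookup.
vault = {}
token = secrets.token_hex(8)
vault[token] = secret
recovered = vault[token]
```

Both paths return the original bytes, but what must be protected differs: the key in the first case, the mapping table in the second.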

Data Tokenization vs. Masking

Data tokenization and data masking are two commonly used methods for protecting sensitive data. While both approaches aim to secure information, they differ significantly in their processes and outcomes.

Data Tokenization

Data tokenization involves replacing sensitive data with non-sensitive equivalents called tokens. These tokens can be used in the operational environment without revealing the original sensitive data. The key aspect of tokenization is that it maintains a reference to the original data through a secure mapping system, which is typically stored in a separate location. This allows the data to be retrieved or de-tokenized when necessary. Tokenization is highly effective in environments where data needs to be utilized for transactions while still protecting its confidentiality.

Data Masking

Data masking, on the other hand, involves altering the original data in a way that the true values are hidden. This is achieved by obscuring each sensitive data element to prevent exposure of the original data values to unauthorized parties. Unlike tokenization, masking is usually irreversible; once data is masked, it cannot be returned to its original form. Masking is used primarily in non-production environments, such as in testing and development, where there is a need to utilize realistic datasets without risking the exposure of sensitive information.

| Feature | Data Tokenization | Data Masking |
| --- | --- | --- |
| Reversibility | Reversible. Original data can be retrieved through de-tokenization. | Irreversible. Once data is masked, it cannot be reversed to its original form. |
| Use Case | Ideal for environments requiring the use of real data for operational purposes such as transaction processing. | Best suited for non-production environments like testing and development where real data is not necessary. |
| Security | Tokens can be used in operational environments without revealing sensitive data, maintaining strong security. | Provides strong security by obscuring data to prevent unauthorized access to sensitive information. |
| Data Integrity | Maintains a functional link to the original data, preserving integrity for certain operations. | Often distorts or removes details, which might not preserve the functional characteristics of the original data. |
| Operational Usability | High. Tokens can replace sensitive data in operations without risking exposure. | Limited. Masked data is typically used where operational functionality of the original data is not required. |
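The reversibility contrast can be shown in a few lines. This is a minimal sketch with hypothetical helper names (`mask_card`, `tokenize`, `detokenize`) and a plain dictionary standing in for the secure mapping system; it is meant only to show that a masked value discards information while a token keeps a route back to it.

```python
import secrets


def mask_card(card_number: str, visible: int = 4) -> str:
    """Irreversibly hide all but the last `visible` characters."""
    hidden = len(card_number) - visible
    return "*" * hidden + card_number[hidden:]


_vault = {}  # stand-in for a secure, separately stored mapping


def tokenize(card_number: str) -> str:
    """Replace the value with a random token and remember the mapping."""
    token = secrets.token_hex(8)
    _vault[token] = card_number
    return token


def detokenize(token: str) -> str:
    """Recover the original value through the mapping."""
    return _vault[token]


card = "4111111111111111"
masked = mask_card(card)  # "************1111"
token = tokenize(card)
```

Nothing in `masked` allows the first twelve digits to be recovered, whereas `detokenize(token)` returns the full number to any caller allowed to reach the vault.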

Data Tokenization Tools

Various data tokenization solutions are available in the market, helping organizations enhance their data security. Here are some notable data tokenization tools:

- IBM Guardium Data Protection: This data security platform includes data tokenization among its comprehensive data protection features. It utilizes machine learning to detect unusual activities related to private data in databases, data warehouses, and other structured data environments.

- Protegrity: Protegrity offers a robust data protection platform that features data tokenization. It provides flexible tokenization methods and advanced encryption technologies to protect sensitive data.

- TokenEx: TokenEx is a dedicated tokenization platform that helps organizations secure sensitive data by replacing it with non-sensitive tokens. It offers various tokenization options and strong security measures.

- Voltage SecureData: This data-centric security solution offers data tokenization capabilities, allowing organizations to tokenize data at the field or file level, thus enhancing data security.

- Proteus Tokenization: Proteus Tokenization provides a solution that allows companies to tokenize and safeguard private data across multiple databases and systems. It features centralized management and oversight of tokenization processes.